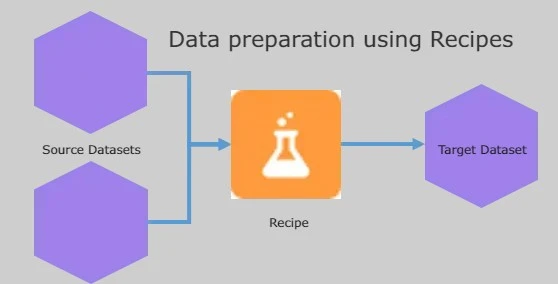

Data preparation using Recipes

This can be good news for admins who have yet to learn Data Prep, or for admins of organizations with many dataflows and datasets that will need to be migrated to Recipes. How do you get up to speed with Data Prep? How do you move your dataflows to Recipes? We saw these questions raised online and heard them asked live during our webinars, and now we’re eager to provide further guidance on what to expect going forward.

Here are FAQs on this topic from the CRMA Data Platform team as well as a few currently available resources to get you moving on your Data Prep journey.

CRMA Data Platform currently has a few overlapping options for creating datasets:

(1) dataflows,

(2) the dataset builder, and

(3) recipes.

Recipes and dataflows both prepare data, but each approach offers its own set of transformations for manipulating it. Dataflows and recipes are not mutually exclusive, and you can use both together to meet complex data preparation requirements. For example, you can use a dataflow to generate an intermediate dataset, and then use that dataset as the source for a recipe that performs further transformations as needed.

New CRMA users can find it difficult to create a dataset for the first time: where do I start, what do the nodes do, which tool should I use, and the list of questions goes on. The Data Platform team acknowledged that:

1. There were gaps in the data preparation tools.

2. Getting started was difficult and slow for new users.

Recipes brought a more powerful and robust tool to CRMA users and made it more approachable for new users to get their data just right for their dashboards. Recipes provide an intuitive, visual interface that allows users to point and click their way to building recipes that prepare data and load it into a target such as datasets, Salesforce objects, etc.

Compared to dataflows, recipes are newer and are recommended for their performance, functionality, extra features, and ease of use. Recipes let you preview the data as you transform it, while dataflows only show the node schema. For example, recipes have more join types, provide different transformation functions for different data types, and include transformations with built-in machine learning functions, such as Predict Missing Values and Detect Sentiment, that are not available in dataflows. Recipes can also aggregate your data to a higher level. Data Prep Recipes has a lot to offer, and we are not slowing down on innovation either!

With a recipe you can:

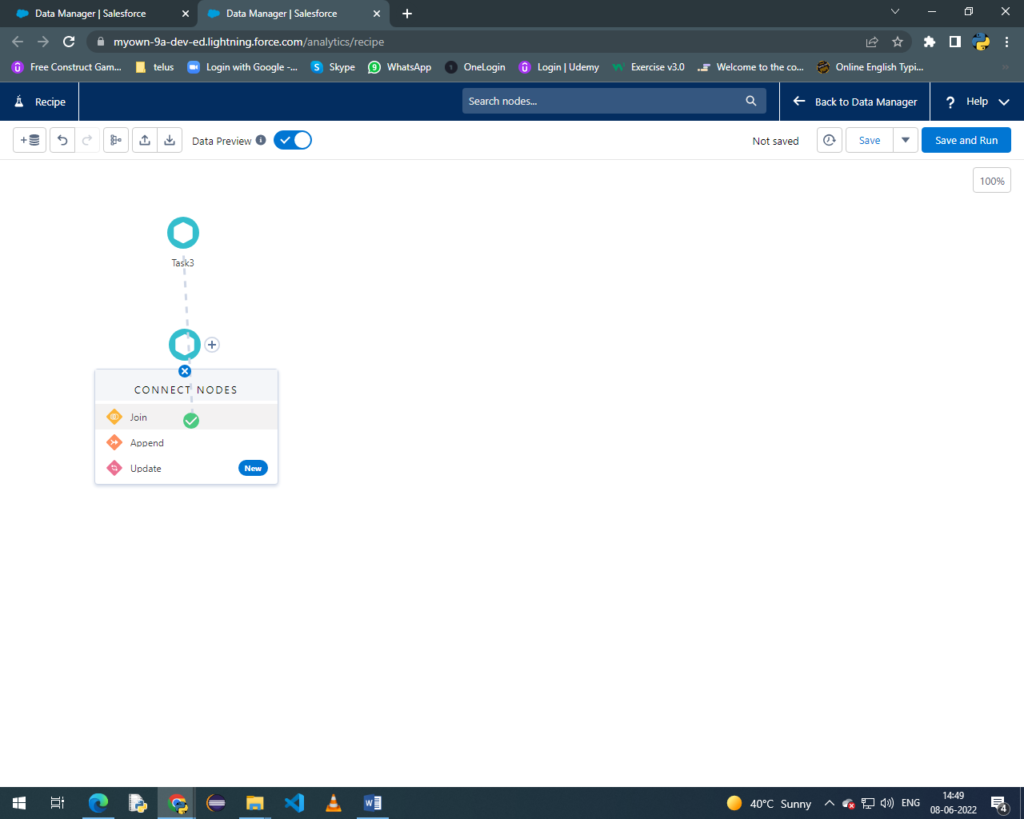

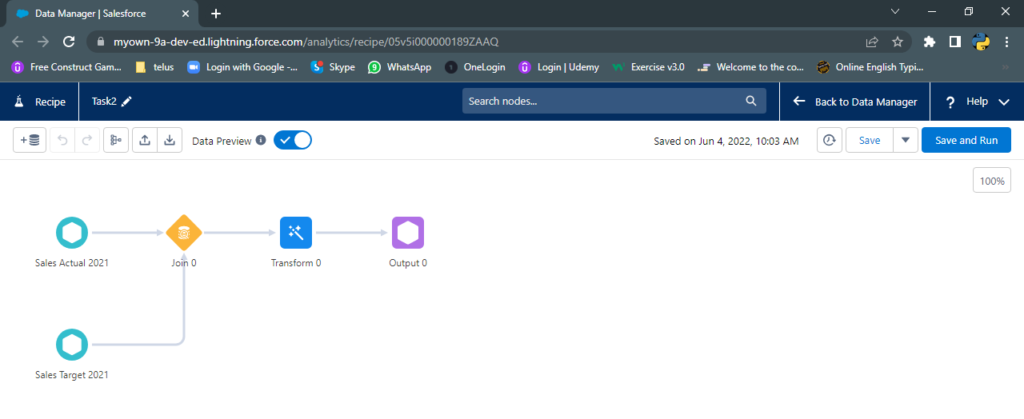

- Combine two or more datasets using the join operation.

- Apply the different transformations available in the recipe to your data.

- Preview your data and see how it changes as you apply each transformation.

- Quickly remove columns or change column labels.

- Analyse the quality of your data with column profiles.

- Get smart suggestions about how to create and transform your data.

- Aggregate and join data.

- Bucket values without having to write complex SAQL expressions.

- Use built-in machine learning-based transforms to detect sentiment, cluster data, and generate time series forecasts.

- Create calculated columns with a visual formula builder and custom formula builder.

- Perform different calculations across rows to derive new data.

- Use a point-and-click interface to easily transform your values and ensure data consistency. For example, you can bucket, trim, split, and replace values without writing a formula or query.

- Push your prepared data to other systems with output connectors. Note that a recipe without an output node will not run.
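To make the value transforms in the list above more concrete, here is a minimal Python sketch of what bucketing, trimming, splitting, and replacing do to a single row. It only illustrates the ideas and is not how recipes are implemented; the function names, the column names (`Account`, `Territory`, `Amount`), and the bucket boundaries are all assumptions made up for this example.

```python
def trim(value: str) -> str:
    """Remove leading and trailing whitespace."""
    return value.strip()


def replace(value: str, old: str, new: str) -> str:
    """Replace every occurrence of `old` with `new`."""
    return value.replace(old, new)


def split(value: str, delimiter: str) -> list[str]:
    """Split one value into several parts at the delimiter."""
    return value.split(delimiter)


def bucket(value: float, upper_bounds: list[tuple[float, str]], default: str) -> str:
    """Return the label of the first range whose upper bound exceeds the value."""
    for upper, label in upper_bounds:
        if value < upper:
            return label
    return default


def clean_row(row: dict) -> dict:
    """Apply the four transforms to one hypothetical opportunity row."""
    region, country = split(row["Territory"], "-")
    return {
        "Account": replace(trim(row["Account"]), "Corp.", "Corporation"),
        "Region": region,
        "Country": country,
        # Hypothetical boundaries: < 1000 is Small, < 10000 is Medium, else Large.
        "DealSize": bucket(row["Amount"], [(1000, "Small"), (10000, "Medium")], "Large"),
    }


if __name__ == "__main__":
    print(clean_row({"Account": "  Acme Corp. ", "Territory": "EMEA-UK", "Amount": 4200}))
```

In a recipe, each of these steps would be a point-and-click transform rather than code, with the preview showing the effect on every row.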

Can we move our dataflows to Recipes?

The best way to keep your organization one step ahead is to move your dataflows to Recipes. That can be a large, daunting task for those of you who have become dataflow specialists or are looking at a giant pile of dataflows that nobody has touched in a few years. That’s why we are telling you now! We value transparency and feel that the best way for us to ensure a successful transition is to be open about our plans and let you tell us what you need to make this a reality.

What can I do now to start preparing my org for the move from dataflows to recipes?

First and foremost, start building new use cases in Recipes. If you’re working on a new project, take some extra time to try implementing it in Recipes and get comfortable. One of the best ways to do that is by using the Convert to Recipe tool.

Here are a few key points:

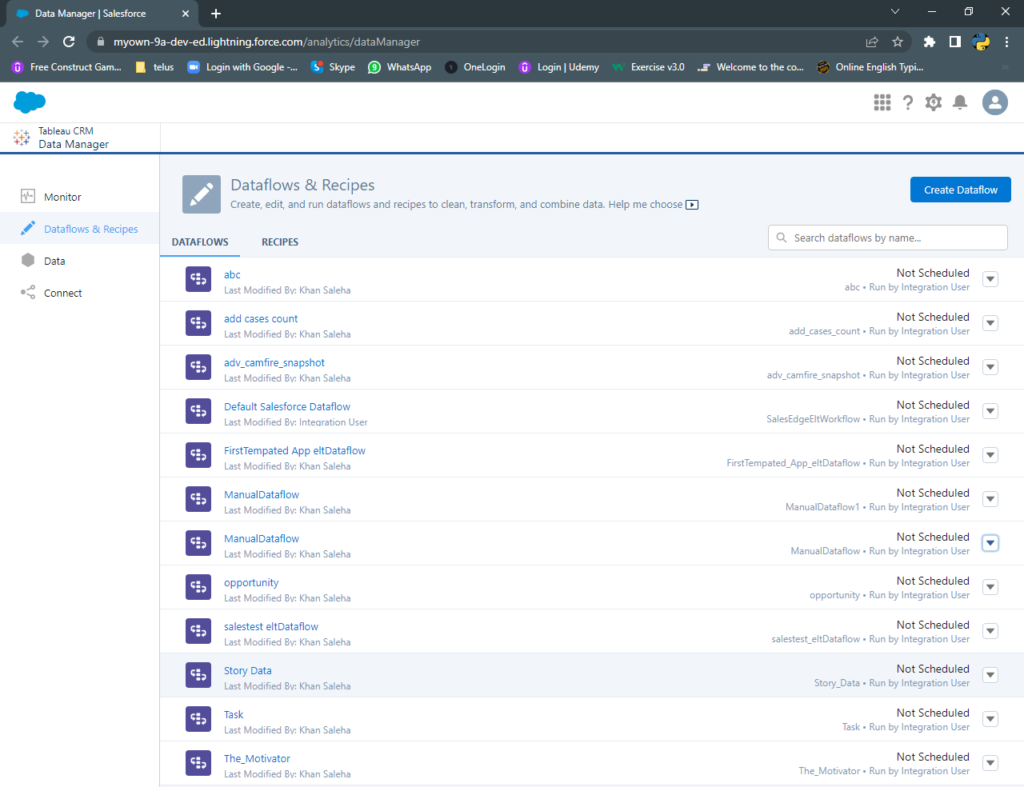

- On the dataflow list page, the drop-down button for each dataflow includes a “Convert to Recipe (Beta)” link.

  - Clicking that link opens a new browser tab that displays the converted recipe for your dataflow.

  - You can then save it like a normal recipe.

  - Dataflow nodes are mapped to the corresponding Data Prep transformations. Your dataflow itself is not changed.

- The converted recipe is not linked to the source dataflow and can be updated as needed.

- Conversion does not affect existing scheduling or notifications; you can schedule the recipe in place of the source dataflow when you are ready to implement it.

- As part of the Beta release, recipe JSON files are limited to 800 KB.
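If you keep a copy of a recipe definition as JSON, a rough size check against the 800 KB Beta limit can be sketched as below. This is only an approximation under stated assumptions: it treats 800 KB as 800 × 1024 bytes and measures the UTF-8 size of the re-serialized JSON, which may differ from how the platform measures the file. The function names and the sample recipe structure are hypothetical.

```python
import json

# Assumption: "800 KB" is interpreted as 800 * 1024 bytes.
MAX_RECIPE_JSON_BYTES = 800 * 1024


def recipe_json_size(recipe: dict) -> int:
    """Return the size in bytes of the recipe when serialized as UTF-8 JSON."""
    return len(json.dumps(recipe).encode("utf-8"))


def within_beta_limit(recipe: dict) -> bool:
    """Check the serialized recipe against the assumed Beta size limit."""
    return recipe_json_size(recipe) <= MAX_RECIPE_JSON_BYTES


if __name__ == "__main__":
    # Hypothetical, heavily simplified recipe structure for illustration only.
    sample = {"nodes": {"LOAD_DATASET0": {"action": "load"}}}
    print(recipe_json_size(sample), within_beta_limit(sample))
```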

What about recipe concurrency?

With the Winter ’22 release, existing customers can opt in to let recipes make use of dataflow concurrency limits. The total job concurrency remains unchanged.

For example, if an org has a dataflow concurrency of 2 and a recipe concurrency of 1, the org will be able to:

- Run 3 recipes concurrently

- Run 2 recipes and 1 dataflow concurrently

Three dataflows will never be permitted to run concurrently. Dataflow concurrency remains unchanged: the org can continue to run 2 dataflows concurrently.
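One way to read the example above is as two rules: dataflows may never exceed the dataflow limit, and recipes plus dataflows together may never exceed the combined total. The Python sketch below encodes that reading so you can test combinations; it is an interpretation of the example, not the platform’s scheduler, and the function name `can_run` and its parameters are hypothetical.

```python
def can_run(recipes: int, dataflows: int,
            dataflow_limit: int = 2, recipe_limit: int = 1) -> bool:
    """Return True if this mix of concurrent jobs fits the limits.

    Assumed rules (an interpretation of the example in the text):
    - dataflows can only use dataflow slots;
    - recipes can use recipe slots and any unused dataflow slots;
    - total concurrency is the sum of both limits.
    """
    total_limit = dataflow_limit + recipe_limit
    return dataflows <= dataflow_limit and recipes + dataflows <= total_limit


if __name__ == "__main__":
    for recipes, dataflows in [(3, 0), (2, 1), (0, 2), (0, 3), (4, 0)]:
        print(f"{recipes} recipes + {dataflows} dataflows:", can_run(recipes, dataflows))
```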

My dataflows are very complex. Can recipes handle what my dataflows do?

Yes. Data Prep transformations offer a wide range of new features, and recipes are at functional parity with dataflows. Functional parity doesn’t mean that every dataflow feature has an exact equivalent; for example, field attribute overrides for scale in dataflows are defined as Edit Attribute transforms in recipes. Instead of SAQL (Salesforce Analytics Query Language) expressions and functions, recipes support SQL (Structured Query Language) expressions and functions.

Some dataflow features are unsupported in Data Prep recipes, such as the SOQL filter expression in the sfdcDigest node. Recipes often take a different approach instead; for example, direct data simplifies the way you bring Salesforce data into the system. Unsupported features will be documented in Salesforce Help (help.salesforce.com).

Performance comparison of Dataflow and Recipes:

Generally, recipes will outperform dataflows in raw performance. The two platforms have different characteristics and properties, so performance will depend on the actual implementation. In general, because the unified data platform runs on Apache Spark, larger recipes benefit from distributed computing and will run faster than an equivalent dataflow that runs on a single host. Please contact Salesforce Support if your converted recipe shows an unexpected performance degradation.

How do I see my job execution details in data prep?

In Data Manager, you can investigate and optimize your recipe jobs with the more detailed Jobs Monitoring page. Data Manager displays historical job execution details for Data Prep recipes, including wait time, run time, input dataset row counts, processing time, transformation time, output dataset row counts, and creation time. If a job fails, its errors are also displayed there.

What are some limitations when using data prep?

- Recipe formulas do not support self-referencing fields.

- The Flatten transform supports a source dataset of up to 20 million rows; flattening more rows can cause the recipe to fail.
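For readers unfamiliar with flattening, the sketch below shows the general idea in Python: each row of a parent-child hierarchy gets the list of all its ancestors, so a dashboard can filter by anyone above a given node. It is a conceptual illustration only, not the product’s Flatten transform; the field names (`id`, `parent`, `ancestors`, `path`), the `/` path separator, and the way the 20 million row guard is applied are assumptions for this example.

```python
# Row limit taken from the limitation above; where the platform enforces it is not modeled here.
MAX_FLATTEN_ROWS = 20_000_000


def flatten(rows: list[dict], self_field: str, parent_field: str,
            max_rows: int = MAX_FLATTEN_ROWS) -> list[dict]:
    """Add an ancestor list and a path string to every row of a hierarchy."""
    if len(rows) > max_rows:
        raise ValueError(f"source has {len(rows)} rows; limit is {max_rows}")

    # Map each node to its direct parent (None for the root).
    parent_of = {row[self_field]: row.get(parent_field) for row in rows}

    result = []
    for row in rows:
        ancestors = []
        seen = {row[self_field]}  # guards against cycles in bad data
        current = parent_of.get(row[self_field])
        while current is not None and current not in seen:
            ancestors.append(current)
            seen.add(current)
            current = parent_of.get(current)
        result.append({**row, "ancestors": ancestors, "path": "/".join(ancestors)})
    return result


if __name__ == "__main__":
    hierarchy = [
        {"id": "ceo", "parent": None},
        {"id": "vp", "parent": "ceo"},
        {"id": "rep", "parent": "vp"},
    ]
    for flat_row in flatten(hierarchy, "id", "parent"):
        print(flat_row)
```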

Why wait? Let’s Start with Data Preparation Using Recipes!

A recipe is a set of instructions that runs to prepare a dataset. Recipes brought a more powerful and robust tool to CRMA users. Recipes provide an intuitive, visual interface that allows users to easily point and click to build recipes that prepare data and load it into a target such as datasets, Salesforce objects, etc.

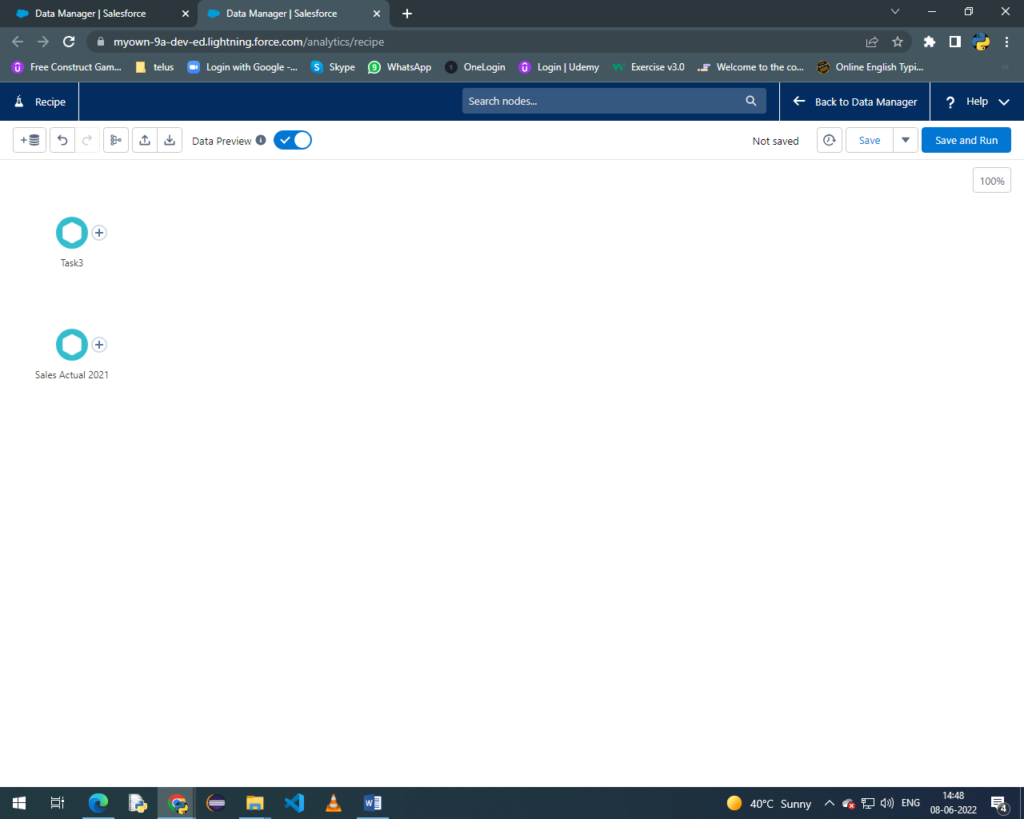

Recipes offer a friendly UI to prepare and clean data and to combine multiple datasets into one. A recipe always starts with one or more datasets, can add more along the way, and results in a new final dataset.

Creating Recipes:

The simplest way to create Recipes is explained below:

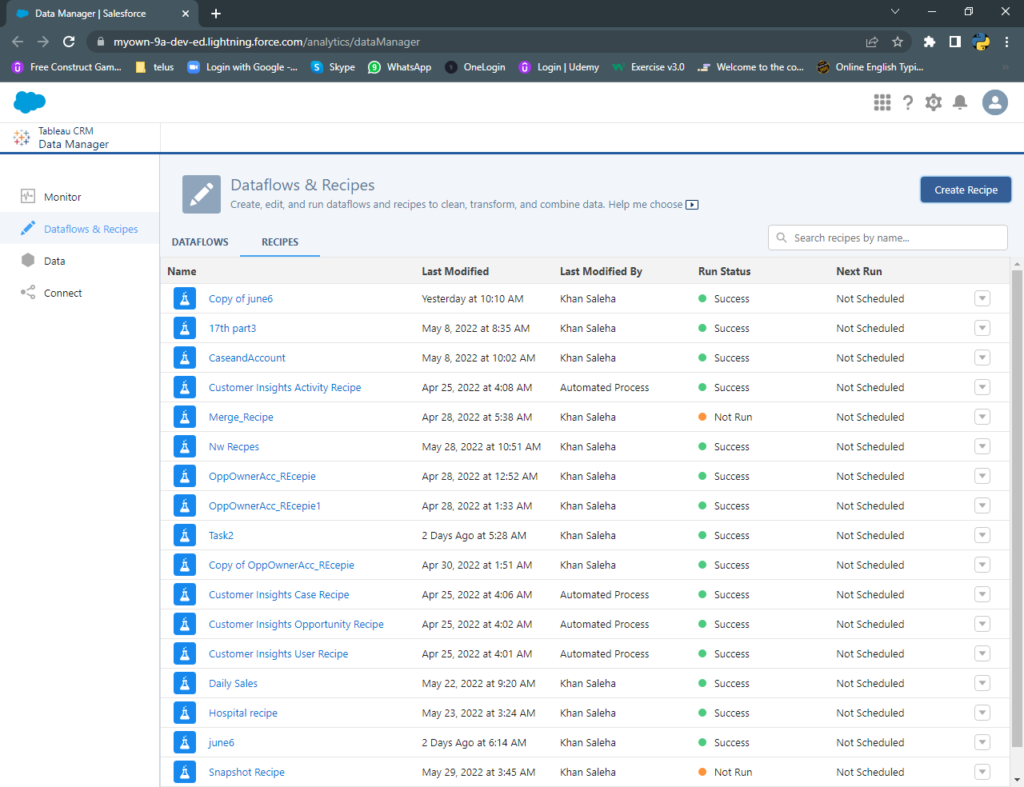

- Go to Analytics Studio.

- Click Data Manager.

- Select Dataflows & Recipes.

- Click Recipes.

- On the right side, click Create Recipe.

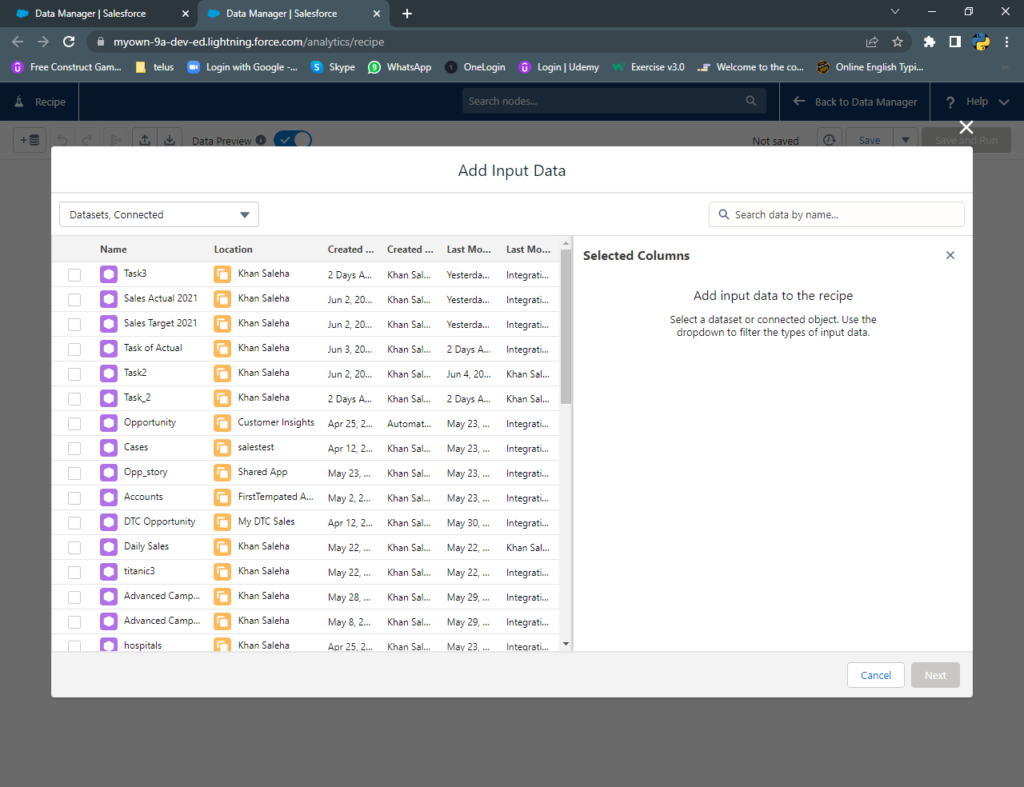

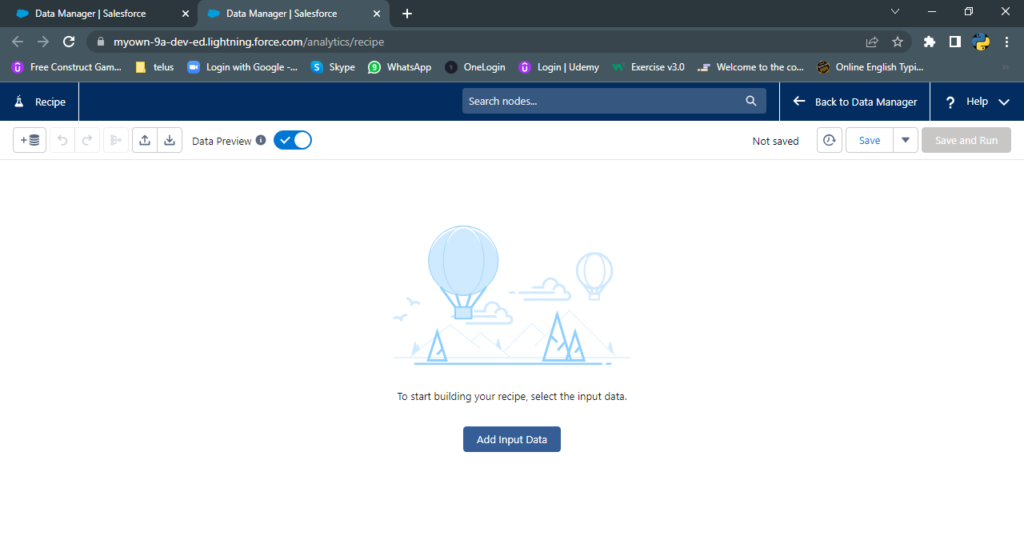

- A window opens where you can add input data.

- You can choose one or more datasets to create a recipe.

Click Create Recipe.

Click Add Data to insert input data.

Select the required dataset or datasets.